Consultant-Tips.Blog

Brought to you by Douglas Herr

MA Introduces New Handheld Device Driving Laws…What About Your State?

It was only a matter of time for us here in MA to start seeing stricter laws regarding phone usage while driving. Massachusetts has passed a new law banning the use of handheld electronic devices while driving, barring looking at text, videos or images while driving. Drivers may continue to use electronics in “hands-free” mode.

The law goes into effect on February 23, 2020 with a grace period for violations until March 31. Penalties will be a $100 fine for a first offense, $250 fine for a second offense and a $500 fine for subsequent offenses.

Exceptions

Drivers will be permitted to use electronic devices under certain conditions, including:

• If you are in a stopped vehicle, and the vehicle is not in a public way intended for travel by motor vehicles or bicycles.

• If you are in or responding to an emergency situation.

• If you are using your device to view a map for navigation purposes and the device is mounted to the vehicle’s windshield, dashboard or center console.

That last one is tricky for those of us who rent cars on a regular basis. I depend on my GPS app everywhere I go, but I don’t carry a mount for my phone. I usually put it in the cup holder. What tricks do you have for this?

Do you know the laws for your home and client states? There are different laws for usage for minors, talking, texting, and public drivers. Kansas, Florida, Missouri, Montana, and Wyoming are the only states with absolutely no restrictions. Click here for a complete chart, but don’t assume it’s 100% accurate.

Please don’t text and drive regardless of the law. Wishing you all a very safe and fun Holiday Season.

Who owns Final Decision on Winter Weather Related Flight Delays?

As Boston prepares for its first winter storm, I thought I would do some research over the holiday weekend on the impact to flights and decisions made based on conditions. I was thinking that there must be some standards to when a decision is made to delay flights due to weather…especially snow storms. My quest was to find out what, and who, determines a delay or cancelation to flights. Let’s take a look at what I found out.

First of all, snow delays occur when the Federal Aviation Administration, the local airport or a pilot decides that the weather conditions are too dangerous for safe travel. The problematic weather may occur at the departure or arrival airport, or en route. A delay may also occur even when your airport has perfect weather. Each commercial airplane makes several trips a day, and a previous flight that the plane was scheduled to make may have been cancelled or delayed by weather. The Federal Aviation Administration requires every airport that receives more than 6 inches of snow a year to create a snow and ice control plan and a committee to create guidelines for winter operations.

Below is what I found out regarding who decides the impact to flight schedules. It’s interesting to see the different decision makers for each of the types of conditions. Winter weather is broken down into three considerations: accumulation, winds, and ice. There are different impacts to flights for each.

SNOW – The FAA considers a runway to be “contaminated” when standing water, snow, ice or slush are present. Standing water, snow or slush can make it difficult for a plane to take off or land safely because they reduce friction and traction, which can lead to hydroplaning/aquaplaning. Landing distances required are different for wet and dry runways, meaning some planes may not be able to land safely on their usual runway when snow is present. The capability to remove snow directly impacts decisions, as do visibility, icing or turbulence problems during flights and landings. The airport determines the conditions of the runway when deciding on flight delays.

WIND – Strong winds can cause visibility issues for pilots even when snow is not falling. While the FAA determines safe parameters for crosswinds during flights, primarily for landings and takeoff, a local airport may need to cancel flights due to blowing or drifting snow. A strong wind might be OK for landings or departures on a sunny day, but when combined with ice may cause problems. Winter storms that intensify rapidly, which meteorologists call “bomb cyclones” or “bombs,” can bring especially strong winds. These winds can prevent take-offs and landings, or cause extreme turbulence in the air, leading to flight delays.

ICE – While planes can be de-iced if still at the airport, icing is an extremely dangerous weather condition for flying, landing and take-offs. The runways become slick, making safe landings unlikely. Additionally, ice build-up on the aircraft itself can lead to mechanical or functional problems. In-flight icing is a bigger problem for small aircraft, but it can still cause issues on large planes. If freezing rain is occurring, it is likely that flights will be delayed or canceled as ice can build up on the wings, windshields and runways. The pilot often determines the potential impact to the plane and can request a delay based on these conditions.

Many of us are getting ready for several months of winter havoc impacting our travel. Being aware of conditions at your airports and flight patterns may help you make better decisions before heading out. Be prepared; dealing with delays is just a part of our job.

Have a favorite snow delay story? Share your comments below.

Analytics…Are You “Reporting the News” or Offering Actionable Data?

I heard this great quote this week in a presentation and I decided to do some research on options in healthcare analytics. When we provide analytics to our clients we are typically just showing current state outcomes and not necessarily predictive analytics (see below). When considering service level agreements (SLAs), for example, the analytics are to provide evidence of achieving outlined goals. That’s helpful for showing outcomes to targets, but doesn’t really provide evidence of opportunity.

The question really is, how can we provide data that is actionable and drives performance improvement?

I remember in my Six Sigma training some years back the concept of the “Define Measure Analyze Improve Control” (DMAIC) process. It’s a concept that any consultant can directly apply to a client’s project.

The basics are:

• Define the problem or hypothesis, stakeholders and scope of analysis.

• Measure relevant data and conduct basic analysis to spot anomalies.

• Analyze via correlations and patterns, provide key visualizations.

• Improve based on insights, showing several options to explore.

• Control the change by monitoring agreed on Key Performance Indicators (KPIs).
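For anyone who likes to see an idea in code, here is a minimal sketch of the “Measure” step: a basic check that flags values sitting unusually far from the average. The ticket counts, the `spot_anomalies` name, and the two-standard-deviation threshold are all my own assumptions for illustration, not part of any Six Sigma standard or client data.

```python
from statistics import mean, stdev


def spot_anomalies(values, threshold=2.0):
    """Return (index, value) pairs that lie more than `threshold`
    sample standard deviations away from the mean."""
    if len(values) < 2:
        return []  # not enough data to estimate spread
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # every value is identical, so nothing stands out
    return [(i, v) for i, v in enumerate(values)
            if abs(v - mu) / sigma > threshold]


# Hypothetical daily help desk ticket counts (made up for illustration)
daily_tickets = [42, 38, 45, 40, 41, 39, 95, 43, 37, 44]
print(spot_anomalies(daily_tickets))  # flags the day with 95 tickets
```

A flagged day is only a prompt to go ask questions (“Analyze”); the same check could be rerun on a schedule as one simple way to “Control” an agreed KPI.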

The other thing to consider is the various tools you may have in providing your client with analytics.

• Embedded Analytics – Amp up applications for clients within their EHR and use the data content within the EHR applications. It provides relevant information and analytical tools designed so end users can work smarter and more efficiently in their various modules. Epic does this very well through all kinds of reporting options; I’m sure Cerner and MEDITECH have comparable capabilities.

• Predictive and Prescriptive Analytics – Sharpen insights and improve accuracy of decisions on actions to be taken based on reporting. Data analytics leads naturally to predictive analytics using collected data to predict what might happen. Predictions are based on historical data and rely on human interaction to query data, validate patterns, create and then test assumptions. I saw a great presentation by my company that offers this in our DMA products…very cool.

• AI-Assisted Analytics – Offer a smarter user experience with search, voice, and narration options. AI is a combination of technologies, and machine learning is becoming ever more popular in healthcare. AI machine learning makes assumptions, reassesses the model and reevaluates the data.

As consultants, we may be faced with variations of client requests when it comes to tracking performance, providing data on deliverables, and/or showing performance improvement trends. I think we need to make sure we are tracking those areas of true value and reporting on them in a more progressive, future-state, goal-driven manner vs current state monitoring that just shows minimal achievements. Did I just write that? LOL. That is a mouthful, but I think it makes sense and aligns with the quote I heard around ‘news vs actionable’ data.

What are your thoughts on driving actionable data? Have any successes in this area? Share your comments below.

NY Times Article Re: Epic…Clinical Adoption Failure

While attending a series of CHiME keynote speaker presentations this week, I found one physician’s views and materials extremely interesting. Certainly worthy of further discussion for those of us in the EHR consulting arena. Before diving in, let me say that overall I thought the presentation offered positive insight into the impact of AI on patient care and quality. It included reviews and commentary on fantastic technologies in a wide range of apps and wearables impacting patient care and data management. Great slides, videos, and statistics to back up the overall theme.

However, there was one slide that possibly inadvertently placed blame regarding physician fatigue, frustrations, and burnout on EHRs, specifically Epic. The slide I am referring to showcased last week’s article from the NY Times about a physician’s experience with Epic.

If you have not read the article, Our Hospital’s New Software Frets About My ‘Deficiencies,’ I highly encourage you to do so. Here is the link: https://www.nytimes.com/2019/11/01/health/epic-electronic-health-records.html. You’ll no doubt find the article’s spin comical and witty, but it drives an underlying message of EHRs’ shortcomings and failings due to disconnection with end user (physician) needs.

The presentation at CHiME showed one quote of the article in a slide…

“Who is Epic? I try to imagine. Perhaps a clean-shaven man who wears square-toed shoes and ill-fitting business suits. He follows the stock market. He uses a PC. He watches crime dramas. He never bends the rules. He lives in a condominium and serves on the board of directors. He rolls his shirts into tubes and arranges them by color in his drawers.

When you bring cookies to work, he politely declines because he is on a keto diet. He sails.

And he doesn’t know his audience.”

Like I mentioned, witty for sure, but with negative undertones suggesting Epic is an uncaring, insensitive vendor who doesn’t understand physician needs. One colleague of mine wrote me a series of questions that, as consultants, we should all ask ourselves and our clients regarding the concerns highlighted in the article. With his permission, I wanted to share those questions that need answers, or certainly some thought.

1. Why did she have deficiencies on day 1? Was it a build issue or did they choose to bring legacy deficiencies forward?

2. How is it that she did not know what a chart deficiency was?

3. Did the health system run provider personalization labs or sessions in the physician’s lounge? If so, did she attend them?

4. Did she have exposure to EMRs in Med School?

5. Was she really so concerned about the distance that at-the-elbow support would have to walk to get to her that she didn’t request assistance, or would she prefer to curse the darkness instead of lighting a candle?

6. Why did she assume that clinical users are a homogeneous audience and that she typified them?

7. How much of her article had she composed, in her mind if not on paper, prior to her hospital’s go-live?

8. What does she think about data-driven clinical decision making?

While I have to agree that the overall article attempts to bring issues to light, the approach is all wrong and the author takes zero ownership. The questions above hit the nail on the head. Another question we need to ask: did the San Francisco based hospital invest in clinical transformation and adoption planning? I’m guessing not. I really liked the question around personalization and training as well. All three of these areas (transformation, training, and personalization) tend to be overlooked during implementation…or not invested in at a level to tackle the concerns highlighted by the writer of this NY Times article.

Perhaps there is an overall negative association with EHRs regardless of whether it is Epic, Cerner, Allscripts, or MEDITECH. Perhaps it is not the actual functionality, system capabilities, or workflows that are the issue, but the disappointing position associated with physician dislike (and distrust) of EHRs. Another interesting quote from the article…

“Hence the hospital’s decision to switch to Epic, commonly viewed as the least imperfect of several imperfect electronic health record systems on the market.”

We probably see these clinician frustrations at our client sites regularly. As consultants we have a huge opportunity to continue to focus on clinical adoption of all IT investments, including non-EHR areas. The primary talking point of the presentation was AI. If clinicians are having these types of issues with EHRs, where is their adoption and utilization going to be with other technologies? Every project we support has end user facing impact. Watching the presentation and reading this article only reinforces to me the top consulting duties, including mitigating concerns, impacting utilization, and assisting with successful EHR adoption.

Have you faced clinical adoption issues? Thoughts on the NY Times piece? Share your comments below.

SLA vs KPI…What Truly Drives Performance Improvement

While reviewing a proposal request this week I started thinking about areas that drive success for our customers. I mean true measurable performance based outcomes that translate to system utilization improvements, end user satisfaction, and true return on investment. An industry trend continues to be a focus on Service Level Agreements (SLAs). To me, that’s a minimum standard of services and doesn’t really drive success; it simply meets the minimum requirement. As consultants, we are always striving to exceed expectations, so why don’t we showcase Key Performance Indicators (KPIs) vs SLAs?

Let’s back up and first define the difference between the two.

Service Level Agreements (SLAs) – According to Wikipedia, an SLA is a contract between a consulting firm and their customer that documents what services the firm will furnish and defines the service standards the firm is obligated to meet. In the case of the proposal, SLAs are agreements on response times to answer calls, resolution times to complete tickets, and ticket request approval/escalation process agreements.

Key Performance Indicators (KPIs) – According to Oxford Dictionary, KPIs are quantifiable measures used to evaluate the success of an organization, employee, etc. in meeting objectives for performance. To me, KPIs provide a way to measure how well clients and/or projects are performing in relation to their strategic goals and objectives.

With those definitions I think it’s clear to say that SLAs define requirements on delivery and KPIs are measures to show improvement over time on the delivery.

As a consultant you should be aware of the SLAs. These are contractual agreements on the services to perform. We should not stop there. We should then take it to the next level and ask if we are also providing KPI trackers to showcase improvements and ROI for the customer…and if not, work with the client in showing the value of also creating KPIs.

When working with a client on creating KPIs, remember that the operative word in KPI is “key,” because every KPI should be related to a specific business outcome with a performance measure. KPIs are often confused with business metrics. Although often used in parallel, KPIs need to be defined according to critical or core business objectives with ability to show actual return on investment (ROI).

While sales may sometimes lead this effort as part of a proposal, I find more often we only provide SLAs in the contract. Here is a huge opportunity as a consultant to add value to the efforts we are providing.

Areas to consider when creating KPIs:

- What is the client’s desired outcome?

- Why does this outcome matter to them?

- How are you going to measure progress?

- What resources are needed to achieve the outcome?

- How will you know you’ve achieved the intended outcome for your client?

- How often will you review progress towards the outcome?

As an example, let’s say your objective is to decrease wait times on your Epic Help Desk tickets post go-live. You’re going to call this your Help Desk KPI.

From here agreements would include:

- To decrease ticket resolution time by 20%

- Progress will be measured by ticket type (“Tier 1” or “Tier 2”) and the amount of time spent to resolve the issue

- By hiring an outsourced company (your firm) there is an expectation of a reduction of escalated tickets to the application team. We can measure this as well.

- Outcomes can be reviewed on a weekly, monthly, or quarterly basis.

Now the client will have a contract with SLAs that show the minimum requirements to cover help desk calls, plus they will have KPIs that show improvements in ticket management and resolution. It’s truly a winning combo to provide both to clients.
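To make the Help Desk KPI a little more concrete, here is a rough sketch of how the 20% target could be checked by tier. The resolution times below are made-up numbers, and the function names are my own; a real tracker would pull this from whatever ticketing system the client uses.

```python
from statistics import mean

TARGET_REDUCTION = 0.20  # the 20% goal from the example above


def percent_reduction(baseline_hours, current_hours):
    """Fractional drop in average resolution time versus the baseline."""
    base = mean(baseline_hours)
    if base == 0:
        raise ValueError("baseline average must be greater than zero")
    return (base - mean(current_hours)) / base


def kpi_met(baseline_hours, current_hours, target=TARGET_REDUCTION):
    """True when the reduction reaches or exceeds the agreed target."""
    return percent_reduction(baseline_hours, current_hours) >= target


# Hypothetical resolution times in hours, grouped by ticket tier
baseline = {"Tier 1": [4.0, 6.0, 5.0], "Tier 2": [10.0, 14.0, 12.0]}
current = {"Tier 1": [3.0, 4.0, 5.0], "Tier 2": [9.0, 11.0, 10.0]}

for tier in baseline:
    reduction = percent_reduction(baseline[tier], current[tier])
    met = kpi_met(baseline[tier], current[tier])
    print(f"{tier}: {reduction:.0%} reduction, target met: {met}")
```

Running the same comparison at each weekly, monthly, or quarterly review gives the client a trend line rather than a single pass/fail snapshot.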

Have you worked with clients in creating KPIs in addition to already agreed on SLAs? Share your thoughts and comments below.

Clickbait…Silly Headlines that have Consequences of Clicking

Let’s face it, Facebook is filled with crazy time-consuming crap that for some reason finds many of us just clicking away for hours at a time. I realized I spend an average of 3 hours every Saturday and Sunday morning just clicking away on crazy animal videos (Vox), people-bashing sites (Passenger Shaming), taking silly tests (What Disney character am I?), or fact checking fake news (Fox News) while sipping my coffee.

What I also noticed as of late is while clicking away, my email box is filling up with endless advertising and spam. Now I need another hour to clean out my inbox. It’s called clickbait folks, and there are consequences to each and every click beyond just having to read endless advertising…it impacts your email as well.

Some of the silliest sites that love to trap you into clickbait include Faves, Rare America, Buzzfeed, Nameless Network, Tip Hero, Super News TV, MagiQuiz, and the list goes on and on. But the most ridiculous part of all of this is the titles and images used that entice us just enough to click. Here are the top ten that I had to see to believe from this week.

“A Lot of Commercial Pilots Don’t actually Know How to Fly Planes, FAA Says”

“Gang of 100 Feral Chickens Terrorizing Town”

“Beagles Are 97% Accurate in Sniffing out Cancer in Blood, Per New Study”

“Man Invents Special Glasses That Let Short People See over Tall Friends.”

“Elton John Reveals Richard Gere And Sylvester Stallone Fought Over Princess Diana”

“Construction Worker Shoots Self with Nail Gun, Tries to Pull Nail Out with Pliers”

“Man Hiding Drugs in His Butt Accidentally Shoots Himself in Testicles”

“Bride Puts Dad’s Ashes in Nails So He Can ‘Walk her’ Down the Aisle “

“3 Men Arrested For Sexually Assaulting 9 Horses, a Cow, and Goat and Dogs”

“Homeowner Slammed as Racist For Hanging Halloween Decoration”

Let’s get back to the clickbait issue. Many of these sites that you click on have what are called “harvesting programs” that can identify your information, including your email. There are several tricks of the trade, but another really common one is that you actually give your email when taking a test or clicking “link to Facebook” in order to get into an article or video. Once you do this, it’s all over.

The best advice I could find is to create and use a disposable email address. Once you get up to 999 spam emails, close it and create a new one. Now I can go through my regular email, spam free, but still enjoy taking a test to determine what Avenger character I am most like. Important stuff here for sure!

What’s your favorite clickbait site? Share your comments below.

JR does not appear on my flight reservation…Do I need to be concerned?

I’ve done so much research on this subject over the years after having a couple issues. Most recently at my new job. I’ve read that TSA requires an exact match on the ticket to your ID. However, Delta has never once printed JR on my name, just my middle initial. I rarely use JR anymore as it’s created nothing but problems from credit cards to email to IDs.

My HR department at work did not recognize the space I put between my last name and suffix. I listed my full name as Douglas A. Herr Jr. I knew I was in trouble when my assigned email was Douglas.HerrJR@nuance.com. When it came time to book my first flight, sure enough…issues matching with frequent flyer award number and my travel ID for TSA pre. It took a couple weeks to have HR remove JR and IT to update my email which triggers everything for reservations in Concur. Now it just says Douglas A Herr on my tickets.

Delta merges my first name and middle initial. Every ticket I print says Herr, DouglasA. I’ve asked to have this corrected a million times. If I don’t print the ticket and just use the scan on my phone, no problem. If I use Clear at the airport, not a problem. But if I print my pass and try to go through TSA pre, I get questions.

According to the U.S. Customs and Border Protection agency (CBP) website, you may not receive TSA PreCheck “if your airline frequent flyer profile and reservation do not have your correct first/middle/last name and correct date of birth”. No suffix is required. In fact every site I go to makes no mention of requiring JR.

The real issue for any reservation, credit card application, or other memberships is they don’t provide a space for suffix on the application. I always put Herr followed by a space and then JR. They always drop the JR. My ATM card doesn’t even have JR on it but when I log into my account I see it on my profile.

At this point I’ve decided to proceed with my middle initial attached to the end of my last name and no suffix. With Real ID requirements, I wonder how this might impact all this. I always have my passport with me, so I probably won’t even bother getting that new license. Hopefully I won’t have any issues. Thank goodness my name isn’t Douglas Arthur Herr III. I could only imagine the nightmare!

Do you have a suffix? How do you tackle this issue? Leave your comments below.

5 Tips for Providing References

A couple recent requests for references put me in an awkward situation. One issue was the prior employee had not listed clients or roles that I could identify. The other issue was an unrealistic timely response expectation. Both happened in the same week, so I thought I better write about this as it is that time of year for new engagements to be kicking off.

The final stages of any interview process usually include providing the potential employer with references. Reference checks are common in our industry and should be carefully considered before sending in. The request is likely to provide professional references that can speak to your skill sets and expertise. Most companies use a third party to conduct references rather than an internal HR resource reaching out.

There are some common mistakes candidates make.

Below are 5 simple tips to remember when selecting your references.

1. Use previous supervisors from your firm, not clients – If you list a client, don’t be surprised if they don’t follow up on a request for a reference. Many companies have strict policies on how or when they can provide a reference. Because you were a consultant rather than a full time employee, clients have limitations on the information they can provide. Using your immediate supervisor from your prior firm is a better option. They’ll be able to speak to all of your engagements, not a single project.

2. Select someone who knows you and your work capabilities – While listing a prior executive of your firm may look good on paper, they may not be able to speak to your skill sets. Most firms have practice managers or project leads. Use those who directly interacted with you during your engagement(s). Don’t limit your list to just prior managers; you can list colleagues as well.

3. Be sure to list your firm’s name and the clients you supported – I recently was asked for a referral but could not align the candidate with any of the engagements listed. As consultants you may have worked for many firms and even more clients. Link the two so that anyone calling can be clear. The best way is to list the firm on your resume with the engagements under that employer and the dates of each project supported, especially if you are a CT or ATE resource.

4. Ask permission to list someone as a reference first – Always take a moment to reach out to the individuals you plan to list for references. A phone call is usually best so you can elaborate on the role you are applying for. Any information you can provide will also be helpful to get the best response. Try to obtain information on the company’s referral process. Do they simply call, or do they have an online process? Don’t blindside your references; they may not respond.

5. Use references from work provided within the last 3 years – Timely references for more recently completed work will likely result in a more accurate summary of your skills. Anything more than three years old may not reflect your current skills or capabilities and may not align with the job you are interviewing for.

Be sure to follow up afterwards with those you listed to extend your gratitude. As consultants we may have a few different engagements in a year and need to provide references regularly. Keeping in touch with those you’ve identified as ideal references will only help expedite the process for future requests.

Have you given or requested references that didn’t work out? Share your comments below.

Why do Recruiters only post the “Region” of the Country for opportunities?

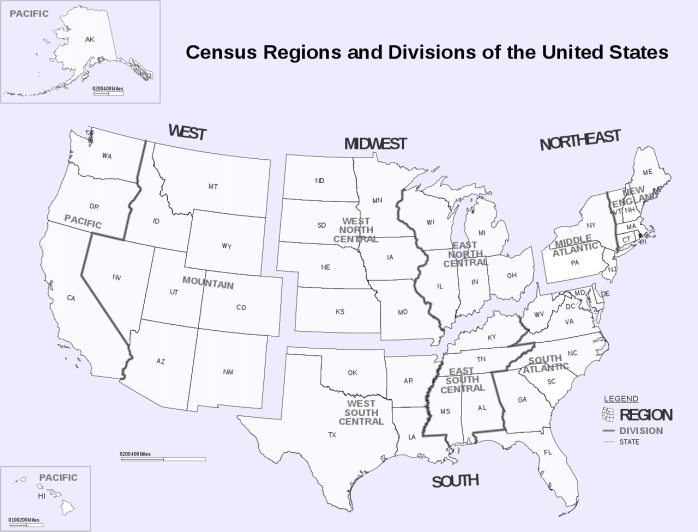

Almost all recruiters provide details on opportunities including a vague description of the actual location of the client. The issue is that not everyone uses the same language to describe the “regions” or states within that region. I was recently asked if I could be more specific than just saying the “Midwest.” It got me thinking, almost all opportunities are spelled out in this cryptic manner. What is the answer to this inconsistent use of territories within our country? Let’s start by looking at the variances of regions.

Technically there are five regions of the country: Northeast, Southeast, Midwest, Southwest, and the West. They are broken down as such:

The West Region includes:

– Alaska — Nevada

– California — Oregon

– Colorado — Utah

– Hawaii — Washington

– Idaho — Wyoming

– Montana

The Southwest Region includes:

– Arizona

– New Mexico

– Oklahoma

– Texas

The Midwest includes:

– Iowa — Missouri

– Indiana — Nebraska

– Illinois — Ohio

– Kansas — North Dakota

– Michigan — South Dakota

– Minnesota — Wisconsin

The Northeast Region includes:

– Connecticut — New Jersey

– Delaware — New York

– Maine — Pennsylvania

– Maryland — Rhode Island

– Massachusetts — Vermont

The Southeast Region includes:

– Alabama — Louisiana

– Arkansas — Maryland

– Florida — Mississippi

– Georgia — Kentucky

– North Carolina — Tennessee

Seems simple enough…but many recruiters use 7 regions instead of the traditional five. These are broken up as:

New England Region

Mid-Atlantic Region

Southern Region

Mid-West Region

South-West Region

Rocky Mountains

Pacific Coastal Region

To make things more complicated when using 7 regions, no one seems to agree on what states belong in what region. For example, the Rocky Mountain Region always includes Colorado, Idaho, Montana, Nevada, Utah, and Wyoming. Sometimes it includes Arizona and New Mexico as well (according to Wikipedia). So what the heck does “sometimes” mean? I would like to suggest that all recruiters use the census map…this would put us all on the same page!

Typically there are enough hospital systems within a state that recruiters should be able to be more specific. I may not be interested in an opportunity in North Dakota but have no problem going to Indiana. The Midwest is so big, candidates need to know more. My bad for only saying the “Midwest.” Imagine finding out an opportunity identified as Pacific is actually in Alaska. Hopefully that would never happen. Let’s not waste each other’s time. As candidates, ask where the client is within the region posted. As recruiters, get down as close to the state/city level as you can.

Have you ever inquired about an opportunity with just the region provided as the location? Share your thoughts in the comments area below.

How much “Consulting” do we actually do as consultants?

A candidate recently called me and mentioned that she thought the engagement opportunity sounded more like a temp job than a consulting opportunity. After thinking about it, she might be right; many of our opportunities are like temp staffing placements vs a true consulting role. As I think about our industry and the various roles I’ve filled for clients, I think I’m probably split on roles that were project support roles vs consulting roles. It really begs the question of whether we are positioning opportunities correctly to candidates. But I also think we need to ask: are we filling temp roles to do the work or providing a higher level of service with a greater potential of deliverable outcomes?

Regardless of whether we are being placed in an analyst, testing, training, or go-live support role…we are being brought in for our expertise and knowledge. Our experience truly warrants a higher level of deliverable than simply filling an empty chair on a team. I always say that our primary responsibility should be transfer of knowledge. As consultants, we provide services that both help our clients hit their goals while also ensuring ongoing success once we depart. If all we are doing is the same work as their full time employees, we are not consulting, nor delivering the quality level of work that should be expected.

With that said, we do need to be aware of the client’s expectations of our role. Overstepping boundaries and self-appointing project work may not align with their needs. I once had a consultant walk into a leadership meeting, uninvited, and start making suggestions to the group. The IT Director was, needless to say, not happy and asked that the consultant be removed from the project. There is a fine line between providing consulting level services and actually “consulting.”

There are tons of engagements that are very much consulting roles. These range from advisory services, vendor selection, and assessments to performance improvement reviews and project management type roles. While application analysts and builders don’t really fall under these types of services, there may still be opportunity to provide consultation on best practices. Certainly talk with your recruiter about the opportunity you are looking at to clarify the level of “consulting” the client is looking for. Client culture often dictates the type of role needed for the project and whether they are truly just in need of a resource to keep them on time for the project.

I remember my first day as a consultant. The client was going through a design session with Epic. All the consultants sat in the back of the room as Epic walked the client through various functionality and workflow designs for their implementation. A fellow consultant leaned over and told me to just sit in the back and not say anything…“they don’t want us to say anything.” I was surprised at this as I’m sure they did not just fly me from Boston to LA to sit in the room and say nothing. Needless to say, I didn’t just sit back and watch; I engaged both Epic and the client in discussion around some suggestions I knew would have a negative impact on the project. Shortly after, the client asked me to take on a team lead role. It was a great “consulting” project.

What have you seen at your clients? Share your thoughts and comments below.
